Alfred Binet's IQ Test, 1905

Standardized testing. The very idea of taking one of these tests, facing those little bubbles, #2 pencil grimly in hand, is enough to make anybody shudder.

Transcript

What’s your number? I’m not hitting on you here; I don’t mean your phone number, or for that matter your ZIP code, or Social Security number—useful though they all are. No, I mean those numbers that everybody secretly wants to compare, and that can truly affect the course of your life: your SAT scores. Or your ACT scores, your GRE scores, your LSAT scores, TOEFL, GMAT, MCAT, ASVAB, and on and on.

Those scores count in so many ways: whether or not you advance in school or graduate, what college or graduate school you get into, what scholarships you win, your licensing as a professional, entry into the military. One can even save your life, in a more macabre way; in 2002 the Supreme Court in Atkins v. Virginia declared unconstitutional the execution of the mentally retarded, usually defined by an IQ score of about 70 or below.

But where did that score, and the idea for that score, come from? Even though testing goes back at least as far as the 7th-century imperial examinations for the Chinese civil service, standardized testing as we know it today began much more modestly, with a reserved French psychologist who was determined to find a simple way to quantify the emerging concept of intelligence, and who in the process moved us down the road to an increasingly measured and numbered society.

I’m Joe Janes of the University of Washington Information School. Have your palms started to sweat yet? The very idea of taking one of these tests, facing those little bubbles, #2 pencil grimly in hand, is enough to make anybody shudder. While it may feel as though that’s an ancient, lizard-brain terror, it’s only in the last 150 years or so that people have thought seriously about trying to quantify intellectual ability. The concept of “intelligence” as something other than innate, God-given and universal only begins to arise in the mid-19th century, fueled by a number of factors, including the coalescing of psychology as a discipline and the development of more sophisticated techniques for statistical analysis of data.

Enter Alfred Binet, who was born into a family of doctors but went into law after his first unfortunate encounter with a cadaver. He then drifted into the new psychology and became fascinated by what we would now call child development, an interest that seems to have begun with intense investigation and testing of his own two daughters, Madeleine and Alice. He began to form a notion of the sorts of abilities one might expect of a child as they grow, which he later came to call “mental age.” With a pupil-turned-colleague, Théodore Simon, he broadened his investigations to hundreds of children in schools and in mental institutions.

Then in 1905, after Binet had spent several frustrating years making little progress, the Paris school authorities were seeking a way to identify children who either needed extra assistance in being educated by virtue of their diminished ability, or who were completely unable to be helped by the schools. Binet and Simon took on the challenge and within months hit on a method using a series of questions, gauged to what would be “normally” expected at certain ages. These 30 questions, ranging from following a moving object with the eyes through to defining abstract concepts such as the difference between “boredom” and “weariness,” became the Binet-Simon Scale. Later revisions compared “mental age” to “chronological age,” and others later added the idea of dividing one by the other to form a ratio: a child of 10 with a mental age of 15 would then get a score of 15/10, multiplied by 100 to give 150, their “intelligence quotient”: IQ. Somewhere along the line, we also get definitions for terms once clinical and today used only crudely: “moron” (originally an IQ from 50-69), “imbecile” (20-49) and “idiot” (less than 20).
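To make that arithmetic concrete, here is a minimal Python sketch of the ratio just described: mental age divided by chronological age, times 100. The function name `ratio_iq` and the check for a non-positive age are illustrative assumptions for this sketch, not anything taken from Binet, Simon, or the later revisions of the scale.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Return the ratio IQ: mental age / chronological age, scaled by 100.

    Hypothetical helper for illustration only; it is not a scoring
    procedure from any published version of the test.
    """
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    return 100 * mental_age / chronological_age


# The example from the transcript: a child of 10 with a mental age of 15.
print(ratio_iq(15, 10))  # 150.0

# A child whose mental age matches their chronological age scores 100.
print(ratio_iq(10, 10))  # 100.0
```

Notice how much the single number hides: 150 says nothing about which questions were answered, or how, only how two ages compare.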

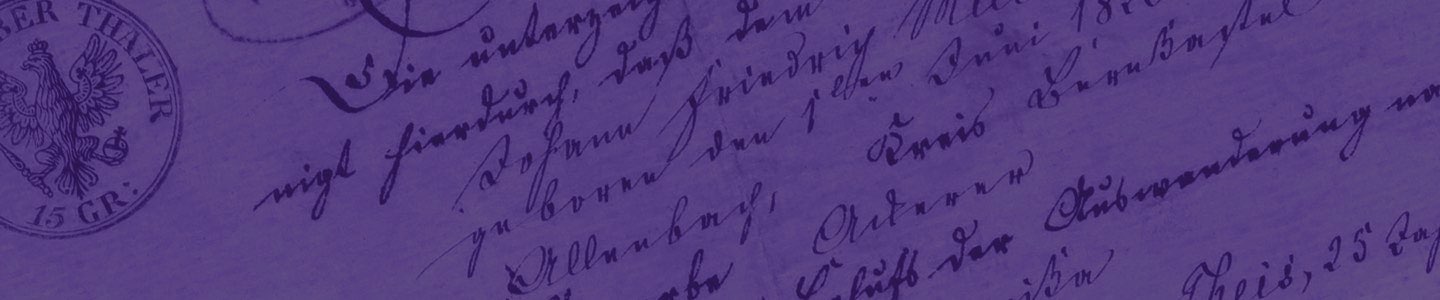

The scale itself was published first in a journal that Binet founded and edited, L’Année psychologique, in June of 1905, not long after Mata Hari debuted in Paris, and in the midst of Albert Einstein’s annus mirabilis; that same month Einstein submitted his major paper on special relativity. It didn’t take long for word to spread and the scale to be widely used. It was revised once before Binet’s untimely death in 1911; it then came to the US in 1916 in a translated and edited version by Lewis Terman called the Stanford-Binet Scale, named for the university where he taught. The Stanford-Binet is still used, along with a number of other intelligence tests, one of dozens of standardized tests, part of the multibillion-dollar testing and test-coaching industry.

Binet worked very hard to be rigorous and make his tests and processes as fair as possible, with extensive directions on how to conduct the tests and score responses; he was deeply engaged with the clinical and educational practice of the day, and aware of his method’s limitations, though seemingly also oblivious to the profound influences of background, preparation, and environment. Three-year-olds are expected to point to body parts, identify objects, repeat syllables, say their family name; 13-year-olds must “correctly” identify differences between a president and a king; and “superior adults” must be able to draw an image of what a folded and cut piece of paper would look like, which I am quite sure I wouldn’t be able to accomplish.

Entire shelves of books have been written about these tests and the industry behind them, both pointing out their perceived unfairness and bias and defending their core assumptions and results. Once you start down this road, though, it’s hard to turn back. If we can measure and quantify something, it must be real, right? Even though we can’t always satisfactorily define or explain what it’s testing, now that there’s a number for it, who’s to question that number’s usefulness, or importance, or validity? And if the lower classes or lesser races get lower scores, ignoring for the moment lots of reasons why that might be the case, well, what can you expect? So now the numbers rule: IQs, SATs, state achievement tests and by extension credit scores, actuarial tables, and on and on.

More recently, broader notions of “intelligence” have been proposed, including the idea of multiple intelligences (linguistic, musical, spatial, interpersonal, and so on), which has a certain appeal, and yet the tests continue. Maybe because of inertia, or maybe because they serve useful purposes.

Or maybe, these tests have ongoing appeal because they just make things so much easier. Think about it; now we can characterize people by using a number or two that seems to say all that needs saying. If that’s your SAT score, you can get into these colleges and you can’t get into those. That bubble you fill in so carefully becomes a pigeonhole. It makes sorting people easier, into the high and the low, the worthy and the not, the ones who can and the ones who can’t. So much simpler than all that messy detail and background and complexity, reducing us all to an easily-located point on the curve, determining your future no matter your past.

References:

Castles, E. E. (2012). Inventing intelligence: how America came to worship IQ. Santa Barbara, Calif: Praeger.

Defending standardized testing. (2005). Mahwah, N.J.: L. Erlbaum Associates.

Encyclopedia of human intelligence. (1995). New York: Simon & Schuster Macmillan; London: Simon & Schuster and Prentice Hall International.

Terman, L. M. (1937). Measuring intelligence: a guide to the administration of the new revised Stanford-Binet tests of intelligence. Boston, New York [etc.]: Houghton Mifflin Company.

Wolf, T. H. (1973). Alfred Binet. Chicago: University of Chicago Press.