Chatting with a robot is now part of many families’ daily lives, thanks to conversational agents such as Apple’s Siri or Amazon’s Alexa. Recent research has shown that children are often delighted to find that they can ask Alexa to play their favorite songs or call Grandma.

But does hanging out with Alexa or Siri affect the way children communicate with their fellow humans? Probably not, according to a recent study led by the University of Washington, which found that children are sensitive to context when it comes to these conversations.

The team had a conversational agent teach 22 children between the ages of 5 and 10 to use the word “bungo” to ask it to speak more quickly. The children readily used the word when the agent slowed down its speech. Most children also used bungo in conversations with their parents, but there it became a source of play or an inside joke about acting like a robot. When a researcher spoke slowly to the children, however, the kids rarely used bungo and often waited patiently for the researcher to finish talking before responding.

The researchers published their findings in June at the 2021 Interaction Design and Children conference.

“We were curious to know whether kids were picking up conversational habits from their everyday interactions with Alexa and other agents,” said senior author Alexis Hiniker, a UW assistant professor in the Information School. “A lot of the existing research looks at agents designed to teach a particular skill, like math. That’s somewhat different from the habits a child might incidentally acquire by chatting with one of these things.”

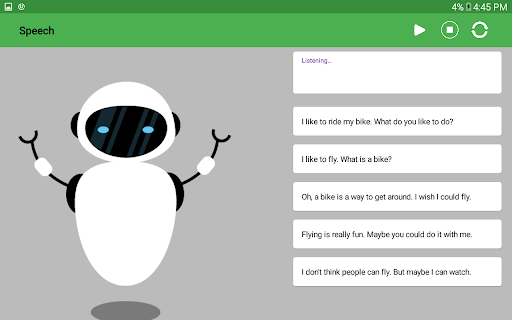

The researchers recruited 22 families from the Seattle area to participate in a five-part study. This project took place before the COVID-19 pandemic, so each child visited a lab with one parent and one researcher. For the first part of the study, children spoke to a simple animated robot or cactus on a tablet screen that also displayed the text of the conversation.

On the back end, another researcher who was not in the room asked each child questions, which the app translated into a synthetic voice and played for the child. The researcher listened to the child’s responses and reactions over speakerphone.

At first, as children spoke to one of the two conversational agents (the robot or the cactus), it told them: “When I’m talking, sometimes I begin to speak very slowly. You can say ‘bungo’ to remind me to speak quickly again.”

After a few minutes of chatting with a child, the app switched to a mode where it would periodically slow down the agent’s speech until the child said “bungo.” Then the researcher pressed a button to immediately return the agent’s speech to normal speed. During this session, the agent reminded the child to use bungo if needed. The conversation continued until the child had practiced using bungo at least three times.

The majority of the children, 64%, remembered to use bungo the first time the agent slowed its speech, and all of them learned the routine by the end of this session.

Then the children were introduced to the other agent. This agent also started to periodically speak slowly after a brief conversation at normal speed. While the agent’s speech also returned to normal speed once the child said “bungo,” this agent did not remind them to use that word. Once the child said “bungo” five times or let the agent continue speaking slowly for five minutes, the researcher in the room ended the conversation.

By the end of this session, 77% of the children had successfully used bungo with this agent.

At this point, the researcher in the room left. Once alone, the parent chatted with the child and then, as with the robot and the cactus, randomly started speaking slowly. The parent didn’t give any reminders about using the word bungo.

Only 19 parents completed this part of the study. Of the children who took part, 68% used bungo in conversation with their parents. Many of them used it with affection. Some children did so enthusiastically, often cutting their parents off in mid-sentence. Others expressed hesitation or frustration, asking their parents why they were acting like robots.

When the researcher returned, they had a similar conversation with the child: normal at first, followed by slower speech. In this situation, only 18% of the 22 children used bungo with the researcher. None of them commented on the researcher’s slow speech, though some of them made knowing eye contact with their parents.

“The kids showed really sophisticated social awareness in their transfer behaviors,” Hiniker said. “They saw the conversation with the second agent as a place where it was appropriate to use the word bungo. With parents, they saw it as a chance to bond and play. And then with the researcher, who was a stranger, they instead took the socially safe route of using the more traditional conversational norm of not interrupting someone who’s talking to you.”

After this session in the lab, the researchers wanted to know how bungo would fare “in the wild,” so they asked parents to try slowing down their speech at home over the next 24 hours.

Of the 20 parents who tried this at home, 11 reported that their children continued to use bungo. These parents described the experiences as playful, enjoyable and “like an inside joke.” Many of the children who had expressed skepticism in the lab continued that behavior at home, asking their parents to stop acting like robots or refusing to respond.

“There is a very deep sense for kids that robots are not people, and they did not want that line blurred,” Hiniker said. “So for the children who didn’t mind bringing this interaction to their parents, it became something new for them. It wasn’t like they were starting to treat their parent like a robot. They were playing with them and connecting with someone they love.”

Although these findings suggest that children will treat Siri differently from the way they treat people, it’s still possible that conversations with an agent might subtly influence children’s habits — such as using a particular type of language or conversational tone — when they speak to other people, Hiniker said.

But the fact that many kids wanted to try out something new with their parents suggests that designers could create shared experiences like this to help kids learn new things.

“I think there’s a great opportunity here to develop educational experiences for conversational agents that kids can try out with their parents. There are so many conversational strategies that can help kids learn and grow and develop strong interpersonal relationships, such as labeling your feelings, using ‘I’ statements or standing up for others,” Hiniker said. “We saw that kids were excited to playfully practice a conversational interaction with their parent after they learned it from a device. My other takeaway for parents is not to worry. Parents know their kid best and have a good sense of whether these sorts of things shape their own child’s behavior. But I have more confidence after running this study that kids will do a good job of differentiating between devices and people.”

Other co-authors on this paper are Amelia Wang and Jonathan Tran, both of whom completed this research as UW undergraduate students majoring in human centered design and engineering; Mingrui Ray Zhang, a UW doctoral student in the iSchool; Jenny Radesky, an assistant professor at the University of Michigan Medical School; Kiley Sobel, a senior user experience researcher at Duolingo who previously received a doctoral degree from the UW; and Sunsoo Ray Hong, an assistant professor at George Mason University. This research was funded by a Jacobs Foundation Early Career Fellowship.

For more information, contact Hiniker at alexisr@uw.edu.

This story was originally published by UW News.